Background

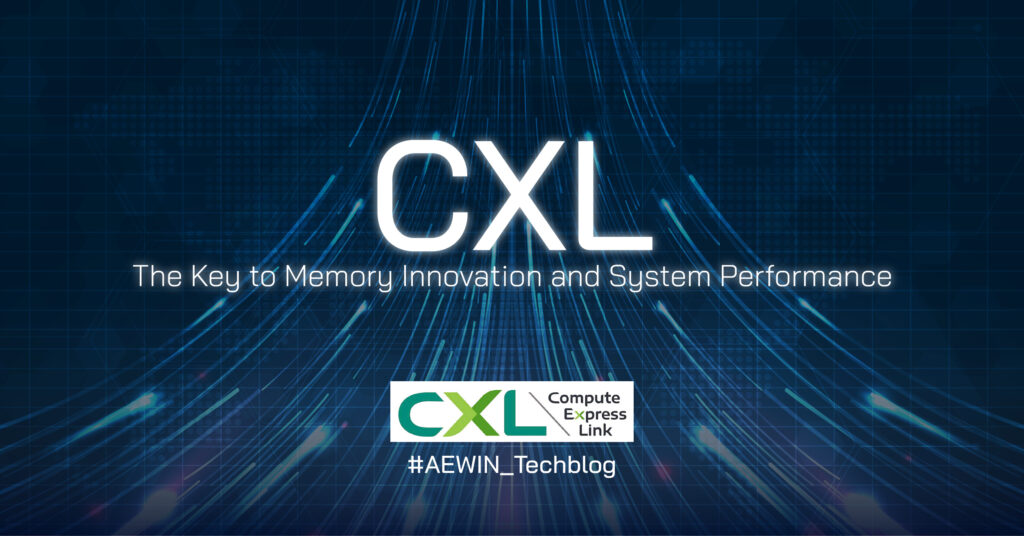

The amount of data has been growing exponentially, and memory is key to enabling innovative applications including Edge AI, ML, and more. High-performance platforms are crucial for accessing, processing, storing, and transferring this valuable data in real time. Ever higher CPU and memory performance is in demand, yet high-capacity, low-latency memory is developing far more slowly than core counts are scaling in multi-core processors. This is where CXL comes in, and we introduce it in this Tech Blog.

Image 1. Slow memory scaling compared to the growth of CPU core counts

What is CXL

CXL (Compute Express Link) is an open standard designed for high-performance systems to increase memory utilization and improve performance. CXL offers a high-bandwidth, low-latency path that allows accelerators to access system resources and enables the system to use the memory attached to CXL devices. It provides efficient, coherent communication between CPUs, memory, GPUs, and other accelerators.

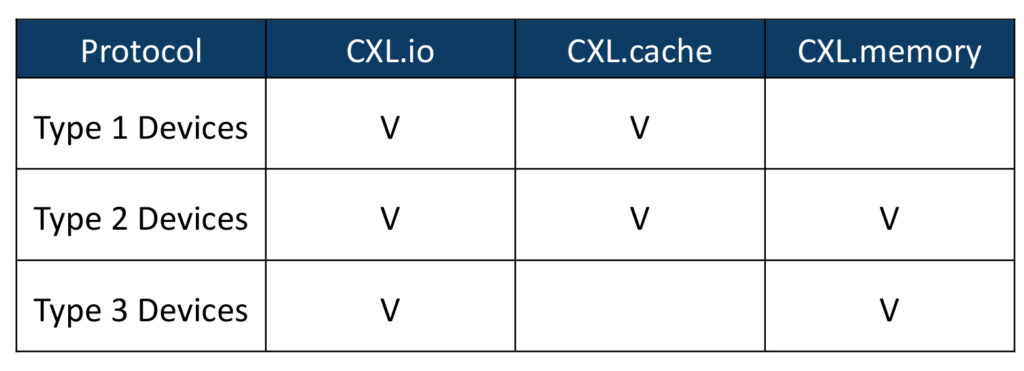

– Protocols

– CXL.io: The foundational protocol, used for device discovery, configuration, register access, and I/O. It is based on PCIe and must be supported by every CXL device.

– CXL.cache: It enables peripheral devices to coherently access and cache host CPU memory for lower latency.

– CXL.mem: The protocol allows host processors to access the memory of peripheral devices for increased memory utilization.

These three protocols together facilitate the coherent sharing of memory resources between host (CPU) and devices (accelerators).
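The division of labor among the three protocols can be summarized in a few lines of Python. This is a minimal illustrative sketch, not the API of any real CXL software stack: `CxlProtocol`, `ACCESS_DIRECTION`, and `is_valid_protocol_set` are hypothetical names. It records which side issues requests under CXL.cache and CXL.mem, and encodes the rule that CXL.io is mandatory. As an added assumption, the check also requires at least one of CXL.cache or CXL.mem, since a device speaking only CXL.io would behave like a plain PCIe device.

```python
from enum import Enum


class CxlProtocol(Enum):
    """The three CXL sub-protocols."""
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# (requester, target) for the two memory-access protocols.
# CXL.io is left out: it carries PCIe-style discovery, configuration and register access.
ACCESS_DIRECTION = {
    CxlProtocol.CACHE: ("device", "host memory"),
    CxlProtocol.MEM: ("host", "device memory"),
}


def is_valid_protocol_set(protocols: set[CxlProtocol]) -> bool:
    """CXL.io is mandatory. Assumption for this sketch: at least one of
    CXL.cache / CXL.mem is also required, otherwise the device is effectively plain PCIe."""
    has_memory_path = CxlProtocol.CACHE in protocols or CxlProtocol.MEM in protocols
    return CxlProtocol.IO in protocols and has_memory_path


if __name__ == "__main__":
    for proto, (requester, target) in ACCESS_DIRECTION.items():
        print(f"{proto.value}: {requester} accesses {target}")
    print(is_valid_protocol_set({CxlProtocol.MEM}))  # False: CXL.io is missing
```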

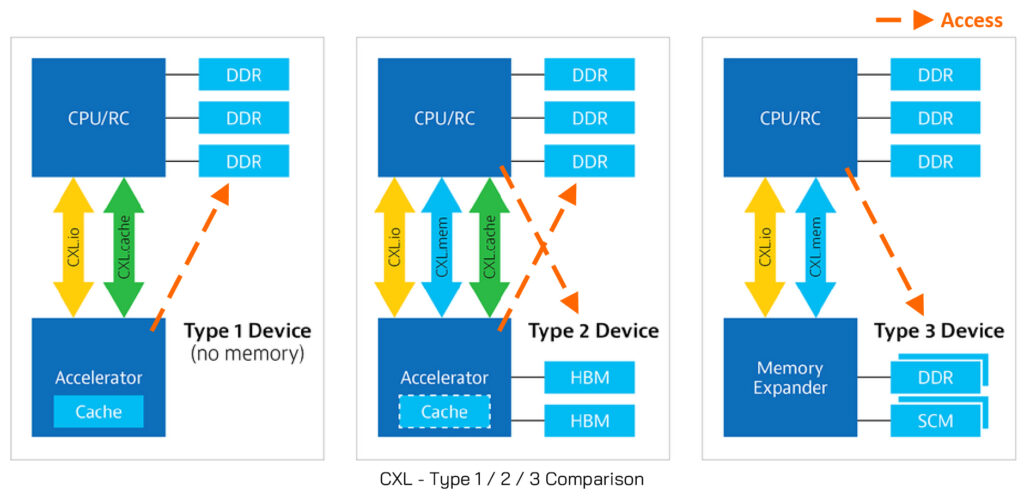

– Device Types

– Type 1: Accelerators without local memory, such as smart NICs, which rely on coherent access to host (CPU) memory.

– Type 2: General-purpose accelerators (GPU, ASIC, or FPGA) equipped with local memory such as GDDR or HBM. They can use CXL to coherently access the host CPU’s memory and/or make the accelerator’s memory available to the host CPU.

– Type 3: Memory devices, including memory expansion boards and persistent memory, can be attached via CXL to expand the memory capacity and bandwidth available to the host CPU.

Image 2. Comparison illustration of Type 1/2/3 device of CXL
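The three device types differ mainly in which protocols they combine: Type 1 uses CXL.io and CXL.cache, Type 2 uses all three, and Type 3 uses CXL.io and CXL.mem. The Python sketch below captures that mapping together with a deliberately simplified classifier. `DEVICE_TYPE_PROTOCOLS` and `classify_device` are hypothetical names for illustration only, and real devices advertise their capabilities through configuration registers rather than two booleans.

```python
from enum import Enum


class CxlProtocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# Protocols combined by each CXL device type (hypothetical lookup table).
DEVICE_TYPE_PROTOCOLS = {
    1: frozenset({CxlProtocol.IO, CxlProtocol.CACHE}),                   # e.g. smart NIC, no local memory
    2: frozenset({CxlProtocol.IO, CxlProtocol.CACHE, CxlProtocol.MEM}),  # accelerator with GDDR/HBM
    3: frozenset({CxlProtocol.IO, CxlProtocol.MEM}),                     # memory expander
}


def classify_device(caches_host_memory: bool, exposes_memory_to_host: bool) -> int:
    """Simplified classifier: pick the device type from two capabilities."""
    if caches_host_memory and exposes_memory_to_host:
        return 2
    if caches_host_memory:
        return 1
    if exposes_memory_to_host:
        return 3
    raise ValueError("neither capability present: not a CXL Type 1/2/3 device")


if __name__ == "__main__":
    for device_type, protocols in DEVICE_TYPE_PROTOCOLS.items():
        names = sorted(p.value for p in protocols)
        print(f"Type {device_type}: {', '.join(names)}")
```

Note that CXL.io appears in every entry, matching the rule that it must be supported by all CXL devices.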


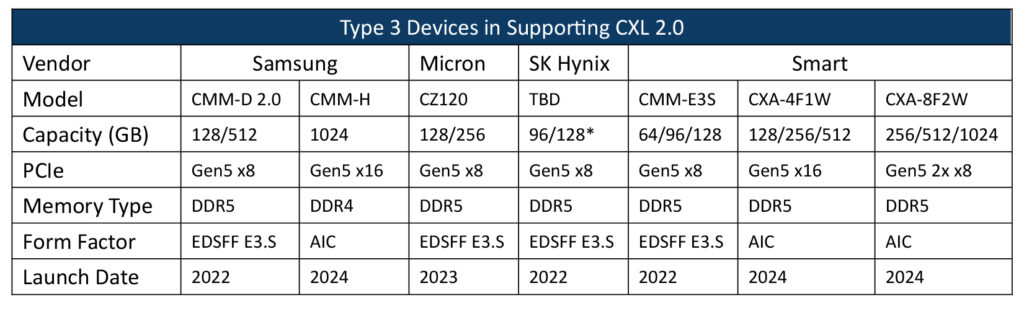

The CXL market is still in its early stages, but it has garnered strong backing from key industry leaders. Several memory vendors began developing E3.S memory with CXL support as early as 2022. Some market players that have produced samples are listed below for reference.

*SK Hynix first launched a 96GB E3.S memory module supporting CXL 2.0 in 2022, and a 128GB E3.S version is currently in development.

– Specifications

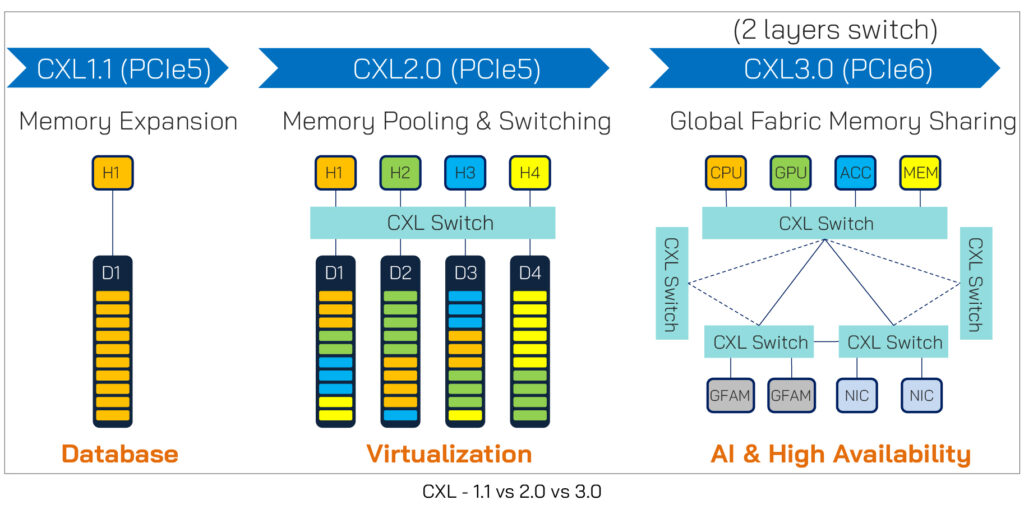

– CXL 1.x (1.0/1.1): CXL 1.0 is based on PCIe 5.0 and allows the host CPU to access device memory for memory expansion, which corresponds to Type 2 applications. CXL 1.1 further enables device-level memory expansion for Type 3 applications and a coherent accelerator mode for Type 1 applications. The connection between host and device is limited to one-to-one.

– CXL 2.0: With support for single-level switching, multiple CXL 2.0 host processors can connect to multiple CXL 1.0/1.1/2.0 devices across multiple virtual hierarchies, which greatly increases the flexibility of device connections. Memory pooling allows multiple hosts to access a shared pool of memory resources, with each host assigned a non-overlapping address range. Each CXL 2.0 device can be divided into multiple logical devices for host processors to access. Distributing the pooled memory efficiently in this way improves memory utilization.
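The pooling rule, where every host receives its own non-overlapping address range, can be illustrated with a small allocator. This is a hypothetical sketch: `carve_pool`, `AddressRange`, the host names, and the capacities are invented for the example, and in a real system the assignment is handled by platform firmware and system software rather than by application code. Each slice can loosely be thought of as one logical device assigned to one host.

```python
from dataclasses import dataclass

GiB = 1 << 30


@dataclass(frozen=True)
class AddressRange:
    """Half-open byte range [start, end) assigned to one host."""
    host: str
    start: int
    end: int


def carve_pool(capacity: int, requests: dict[str, int]) -> list[AddressRange]:
    """Hypothetical allocator: give each host a contiguous, non-overlapping slice."""
    if any(size <= 0 for size in requests.values()):
        raise ValueError("each request must be a positive size")
    if sum(requests.values()) > capacity:
        raise ValueError("requested capacity exceeds the pool")
    ranges, cursor = [], 0
    for host, size in requests.items():  # dicts preserve insertion order
        ranges.append(AddressRange(host, cursor, cursor + size))
        cursor += size
    return ranges


def overlaps(a: AddressRange, b: AddressRange) -> bool:
    """True if two half-open ranges share at least one byte."""
    return a.start < b.end and b.start < a.end


if __name__ == "__main__":
    pool = carve_pool(128 * GiB, {"host-a": 32 * GiB, "host-b": 64 * GiB})
    for r in pool:
        print(f"{r.host}: [{r.start // GiB} GiB, {r.end // GiB} GiB)")
    # Pooling invariant: no two hosts are assigned the same addresses.
    assert not any(overlaps(a, b) for i, a in enumerate(pool) for b in pool[i + 1:])
```

CXL 3.0 memory sharing relaxes exactly this invariant, allowing several hosts to access the same memory.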

– CXL 3.0: CXL 3.0 increases transfer performance and further improves the flexibility of multi-device connections. Based on the PCIe 6.0 interface, it doubles the transfer bandwidth of CXL 2.0/1.1/1.0, which are based on PCIe 5.0. It also removes the restriction that a logical device can connect to only one host processor: memory sharing allows multiple hosts to access the same memory. With support for multi-level switching and fabric capabilities, it enables large-scale architectures with highly flexible connections.
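The bandwidth doubling follows directly from the per-lane signaling rate: PCIe 5.0 (used by CXL 1.x/2.0) runs at 32 GT/s per lane and PCIe 6.0 (used by CXL 3.0) at 64 GT/s. The sketch below computes raw one-direction bandwidth for a x16 link, an assumed but common link width. It ignores encoding and FLIT overhead, so real throughput is somewhat lower; `raw_bandwidth_gb_per_s` is a hypothetical helper name.

```python
# Per-lane signaling rate in gigatransfers per second.
PCIE_GT_PER_S = {"PCIe 5.0 (CXL 1.x/2.0)": 32, "PCIe 6.0 (CXL 3.0)": 64}


def raw_bandwidth_gb_per_s(gt_per_s: int, lanes: int = 16) -> float:
    """Raw one-direction bandwidth in GB/s: one bit per transfer per lane,
    8 bits per byte. Encoding and FLIT overhead are ignored."""
    return gt_per_s * lanes / 8


if __name__ == "__main__":
    for gen, rate in PCIE_GT_PER_S.items():
        print(f"{gen} x16: {raw_bandwidth_gb_per_s(rate):.0f} GB/s per direction")
```

This prints 64 GB/s for the PCIe 5.0 link and 128 GB/s for the PCIe 6.0 link, the 2x step described above.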

Figure 3. CXL 1.1 vs CXL 2.0 vs CXL 3.0


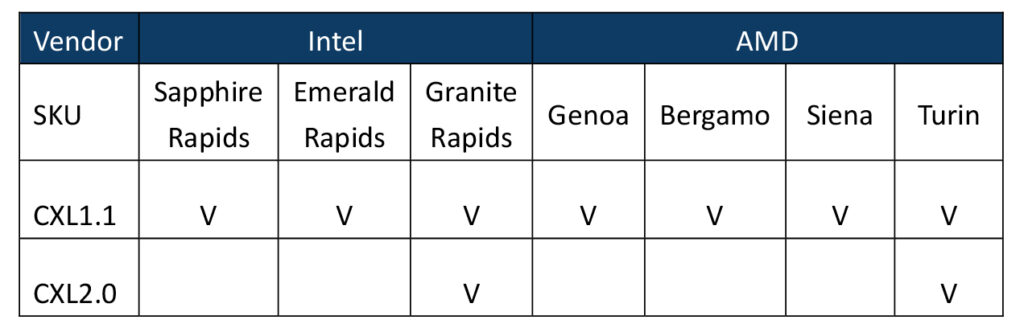

Because CXL is an open standard for coherent communication between CPUs, memory, GPUs, and other accelerators, both the CPU and the connected devices must support it to benefit from it. Major market leaders, including Intel and AMD, have integrated CXL 1.0+ and CXL 2.0+ functionality into their processors. The support table is provided for reference.

CXL is steadily progressing toward widespread adoption and commercial readiness. Most current solutions are based on CXL 1.1 or CXL 2.0, and mass production of solutions supporting CXL 2.0 is expected in 2025.

Summary

CXL is an emerging interconnect technology that enables memory expansion, memory pooling, and memory sharing for optimized memory utilization and better cost efficiency. It plays a vital role in innovative applications like Data Analytics, Edge AI, ML, GenAI, etc., which require efficient processing of massive data volumes.