Introduction

As AI technology develops rapidly, it has been integrated into a wide range of verticals, including Smart City, intelligent transportation systems (ITS), Smart Manufacturing, Smart Medical, and more. In this blog, we test a solution that integrates AEWIN hardware with the AI Stack software from our partner InfinitiesSoft to accelerate GPU load balancing and scaling for inferencing.

Test Setup

AEWIN’s SCB-1932 MEC is a 2U rack-mount network appliance. Built on dual 3rd Gen Intel® Xeon® Scalable processors, this high-performance platform supports eight-channel DDR4 registered ECC RDIMM (up to 3200 MHz) with a maximum memory capacity of 1TB per CPU. It accommodates up to eight Network Expansion Modules, or four Network Expansion Modules plus two full-height, full-length PCIe x16 slots. The maximum Ethernet bandwidth is 800GbE.

Integrating the AEWIN Edge AI Server with InfinitiesSoft's AI Stack creates a high-performance platform for developing and orchestrating machine learning workloads. The resulting Edge AI Appliance gives industry users a ready development environment for building valuable AI applications.

| Item | Configuration |
|---|---|
| System | AEWIN SCB-1932C |
| Processor | 2x Intel® Xeon® Gold 5318S CPU @ 2.10GHz |
| DIMM Slot | 16x 32GB DDR4 (512GB total) |
| OS | Ubuntu Linux 18.04 (Kernel: 5.4.0-Generic) |
| BIOS | C1932A003 |
| BIOS Settings | “Above 4G Decoding”: Enable; “Above 4GB MMIO BIOS assignment”: Enable |
| PCIe Accelerator | 2x NVIDIA T4 |

Figure 1: Test system set-up
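Before running a test like this, it can be worth confirming that both T4 cards are visible to the OS. The following is a minimal sketch that parses the CSV output of `nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader`. The helper names `parse_gpu_list` and `list_gpus` and the sample string are our own illustrative assumptions; they are not part of the AEWIN or AI Stack tooling.

```python
import subprocess

# nvidia-smi query that prints one "index, name, memory" line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=index,name,memory.total", "--format=csv,noheader"]


def parse_gpu_list(csv_text):
    """Parse 'index, name, memory' lines into a list of dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, memory = [field.strip() for field in line.split(",")]
        gpus.append({"index": int(index), "name": name, "memory": memory})
    return gpus


def list_gpus():
    """Run nvidia-smi (requires the NVIDIA driver) and return the parsed GPUs."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    # Hypothetical sample output for a two-card system; real values will differ.
    sample = "0, Tesla T4, 15360 MiB\n1, Tesla T4, 15360 MiB\n"
    for gpu in parse_gpu_list(sample):
        print(gpu["index"], gpu["name"], gpu["memory"])
```

On the test system itself, calling `list_gpus()` instead of parsing the sample string should return one entry per installed accelerator.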

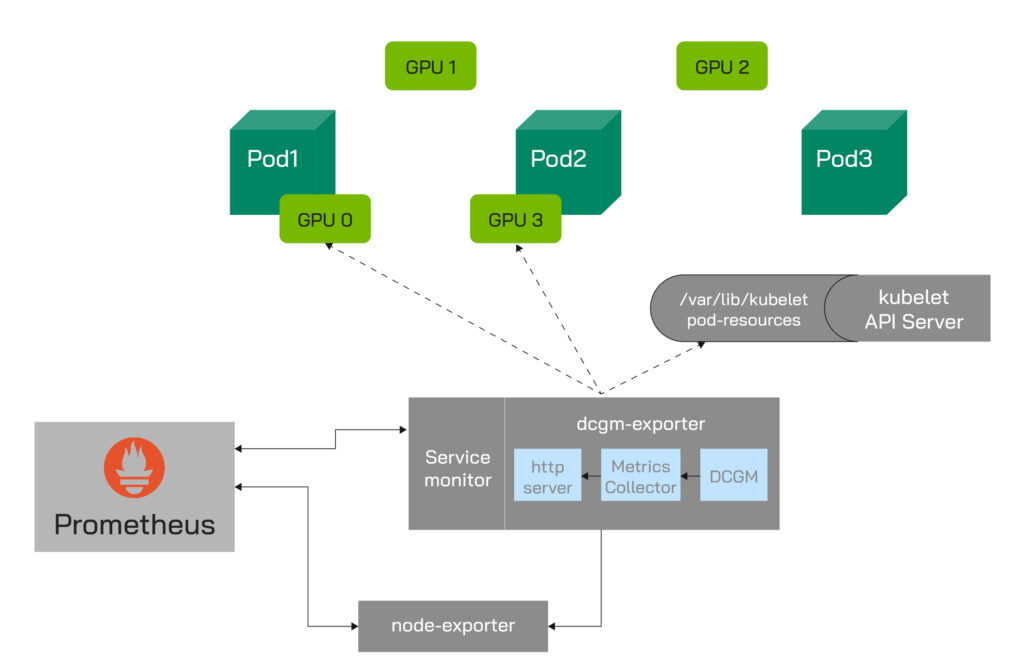

Figure 2: GPU load balancing and scaling
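This post does not describe the scheduling policy AI Stack uses internally. To illustrate the general idea of GPU load balancing and scaling, here is a toy sketch of one common policy, least-loaded dispatch, in which each new inference request goes to the GPU with the fewest active requests. The class `LeastLoadedBalancer` and its methods are hypothetical names for illustration only.

```python
class LeastLoadedBalancer:
    """Toy dispatcher: route each request to the GPU with the fewest active requests."""

    def __init__(self, num_gpus):
        if num_gpus < 1:
            raise ValueError("at least one GPU is required")
        # active[i] is the number of in-flight requests on GPU i.
        self.active = [0] * num_gpus

    def acquire(self):
        """Pick the least-loaded GPU (lowest id wins ties) and mark it busier."""
        gpu_id = min(range(len(self.active)), key=self.active.__getitem__)
        self.active[gpu_id] += 1
        return gpu_id

    def release(self, gpu_id):
        """Mark one request on the given GPU as finished."""
        if self.active[gpu_id] == 0:
            raise ValueError(f"GPU {gpu_id} has no active requests")
        self.active[gpu_id] -= 1

    def add_gpu(self):
        """Scale out by registering one more GPU; returns its id."""
        self.active.append(0)
        return len(self.active) - 1


if __name__ == "__main__":
    balancer = LeastLoadedBalancer(num_gpus=2)
    # Eight concurrent requests are spread evenly across the two GPUs.
    print([balancer.acquire() for _ in range(8)])
    print(balancer.active)
```

In this sketch, scaling out amounts to calling `add_gpu()`: the new GPU starts with zero active requests, so it receives the next requests until its load catches up with the others.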

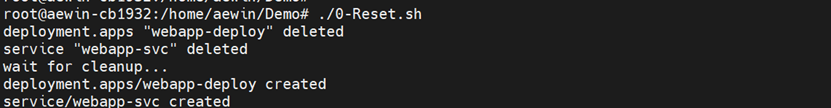

Figure 3: Run the script to activate the demo

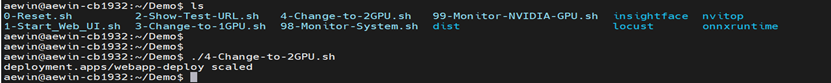

Figure 4: Increase the number of GPUs

Figure 5: Increase the workload as more GPUs are allocated, e.g. GPU-to-concurrent-user ratios of 2 to 1, 2 to 4, 2 to 8, and 2 to 10.

The results in Figure 5 show that the AEWIN SCB-1932C Edge AI Server with AI Stack can perform GPU load balancing and scaling for inferencing, with performance scaling linearly as the workload and the number of GPUs increase.
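One way to check a linear-scaling claim against your own measurements is to fit a straight line and look at the coefficient of determination (R²). The sketch below is a plain least-squares fit; `linear_fit` is a hypothetical helper, and the throughput values are synthetic placeholders generated from a straight line, not results from this test.

```python
def linear_fit(xs, ys):
    """Least-squares fit y = slope * x + intercept; returns (slope, intercept, r_squared)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2 near 1.0 means the points lie close to a straight line.
    r_squared = 1.0 if syy == 0 else (sxy * sxy) / (sxx * syy)
    return slope, intercept, r_squared


if __name__ == "__main__":
    users = [1, 4, 8, 10]                  # concurrent-user counts from the test ratios
    throughput = [5.0 * u for u in users]  # synthetic placeholder, NOT measured data
    slope, intercept, r2 = linear_fit(users, throughput)
    print(f"slope={slope:.2f} intercept={intercept:.2f} R^2={r2:.3f}")
```

Replacing the placeholder list with measured throughput at each user count gives a quick numerical check of how closely the system follows a straight line.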

Summary

In this test, we demonstrated a solution that integrates hardware (the AEWIN Edge AI Server, based on Intel Xeon Ice Lake-SP) and software (InfinitiesSoft AI Stack) to accelerate GPU load balancing and scaling for inferencing. Together, the AEWIN Edge AI Server and AI Stack use GPU resources more efficiently and make AI development and management quick and easy through the platform's interface. With AI Stack installed, the AEWIN Edge AI Server can also manage multiple servers, pooling their resources for highly efficient operation and creating win-win solutions for AI applications.